Viruses have existed for as long as there has been life on Earth.

Early references to viruses

Early references to viral infections include Homer’s mention of “rabid dogs”. Rabies is caused by a virus that affects dogs. The disease was also known in Mesopotamia.

Polio is also caused by a virus. It can lead to paralysis of the lower limbs. Signs of polio may also be seen in drawings from ancient Egypt.

In addition, smallpox, a viral disease that has now been eradicated from the world, played a significant role in the history of South and Central America.

Virology – the study of viruses

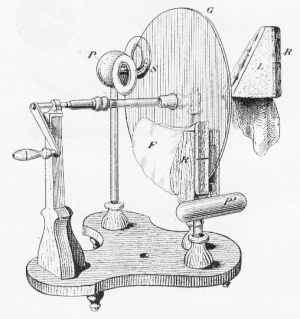

The study of viruses is called virology. Experiments in virology began with the work of Jenner in 1798. Jenner did not know the cause, but he found that individuals exposed to cowpox did not suffer from smallpox.

He began the first known form of vaccination, using cowpox infection to prevent smallpox infection in individuals. He had not yet found the causative organism, or the cause of the immunity, for either cowpox or smallpox.

Koch and Henle

Koch and Henle formulated their postulates on the microbial cause of disease. These state that:

the organism must regularly be found in the lesions of the disease

it must be isolated from the diseased host and grown in pure culture

inoculation of such a pure culture into a host should initiate the disease, and the organism should be recovered from the secondarily infected host as well

Viruses do not conform to all of these postulates.

Louis Pasteur

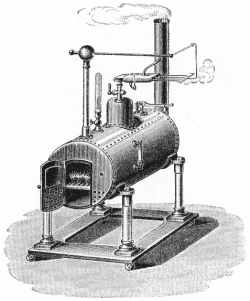

Between 1881 and 1885 Louis Pasteur first used animals as a model for growing and studying viruses. He found that the rabies virus could be cultured in rabbit brains, and he developed the rabies vaccine. However, Pasteur did not try to identify the infectious agent.

The discovery of viruses

1886-1903: This was the discovery period, in which viruses were actually found. Ivanowski looked for a bacteria-like agent, and in 1898 Beijerinck demonstrated the filterable character of the virus and found that the virus is an obligate parasite. This means that the virus is unable to multiply on its own, outside a host.

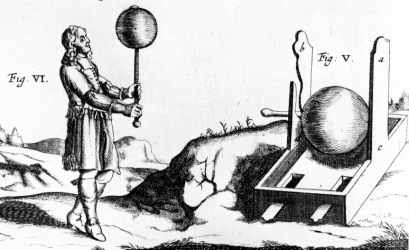

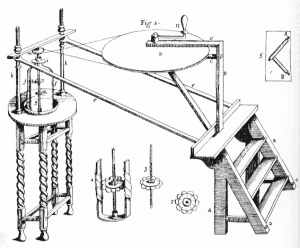

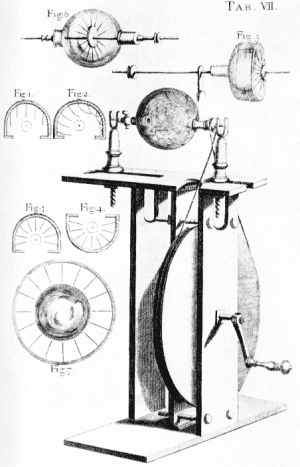

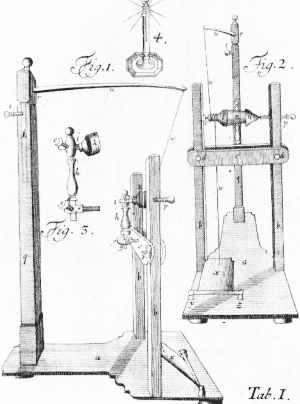

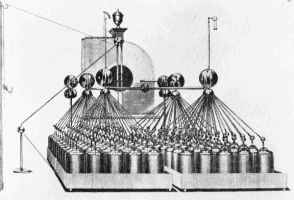

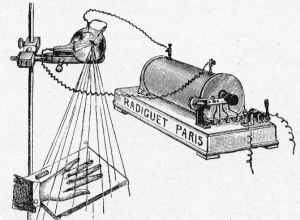

Charles Chamberland and filterable agents

In 1884, the French microbiologist Charles Chamberland invented a filter with pores smaller than bacteria. Chamberland filter candles, made of unglazed porcelain or of diatomaceous earth (kieselguhr), had been invented for water purification. These filters retained bacteria and had a pore size of 0.1-0.5 micron. Viruses passed through them and were therefore called “filterable” organisms. Loeffler and Frosch (1898) reported that the infectious agent of foot-and-mouth disease was a filterable agent.

In 1900, yellow fever became the first human disease shown to be caused by a filterable agent, through the work of Walter Reed. He found the yellow fever virus present in the blood of patients during the fever phase. He also found that the virus was spread by mosquitoes. In 1853 there had been an epidemic in New Orleans, and the rate of mortality from this infection was as high as 28%. Spread of the infection was controlled by destroying mosquito populations.

Trapping viruses

In the 1930s Elford developed collodion membranes that could trap viruses, which showed that viruses have sizes on the scale of nanometres. Earlier, in 1908, Ellerman and Bang had demonstrated that certain types of tumors (leukemia of chickens) were caused by viruses. In 1911 Peyton Rous discovered that non-cellular agents like viruses could spread solid tumors. The agent was termed Rous sarcoma virus (RSV).

Bacteriophages

One of the most important discoveries opened the bacteriophage era. In 1915 Twort was working with vaccinia virus and found agents that grew in cultures of bacteria. Twort abandoned this work after World War I. In 1917, d'Herelle, a French-Canadian, independently found similar agents and named them bacteriophages.

Images of viruses

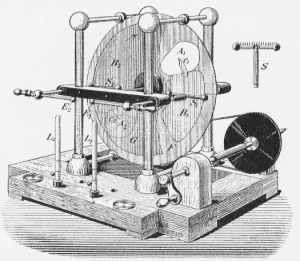

In 1931 the German engineers Ernst Ruska and Max Knoll invented the electron microscope, which enabled the first images of viruses. In 1935, the American biochemist and virologist Wendell Stanley examined the tobacco mosaic virus and found it to be made mostly of protein. A short time later, this virus was separated into protein and RNA parts. Tobacco mosaic virus was the first virus to be crystallised, and its structure could therefore be elucidated in detail.

Molecular biology

Between 1938 and 1970 virology developed by leaps and bounds into molecular biology. The 1940s and 1950s were the era of the bacteriophage and the animal virus.

Delbruck is considered a father of modern molecular biology. He developed the concepts of virology within the science. In 1952 Hershey and Chase showed that it was the nucleic acid portion of the virus that was responsible for infectivity and carried the genetic material.

In 1953 Watson and Crick determined the structure of DNA. In 1949 Lwoff found that a virus could behave like a bacterial gene on the chromosome, and his work also contributed to the operon model of gene induction and repression. In 1957 Lwoff defined viruses as potentially pathogenic entities with an infectious phase, having only one type of nucleic acid, multiplying in the form of their genetic material, and unable to undergo binary fission.

In 1931, the American pathologist Ernest William Goodpasture grew influenza and several other viruses in fertilised chickens' eggs. In 1949, John F. Enders, Thomas Weller, and Frederick Robbins grew poliovirus in cultured human embryo cells, making it the first virus to be grown without using solid animal tissue or eggs. This enabled Jonas Salk to make an effective polio vaccine.

The era of polio research came next and was very important: in 1953 the Salk vaccine was introduced, and by 1955 poliovirus had been crystallized. Later, Sabin introduced an attenuated polio vaccine.

In the 1980s, cloning of viral genes was developed, sequencing of viral genomes was successful, and production of hybridomas became a reality. HIV, the virus that causes AIDS, was also identified in the 1980s. Further uses of viruses in gene therapy were developed over the next two decades.
